MIDI 2.0: Great leap forward or too little, too late?

The winter NAMM show was an opportunity for the MIDI Manufacturers Association to get all their members together to hash out the realities of the long-awaited MIDI 2.0. The question is – are we that bothered?

“MIDI Sucks”

Two years ago I wrote an article about the future of MIDI with the rather clickbait title of “MIDI Sucks, so why do we put up with it?”. In it I suggested that although MIDI is an awesome thing, there are elements that are restrictive, frustrating or just a bit dodgy. In our little corner of the internet it caused a bit of a stir as people quite rightly came to an often emotional defence of the ancient 5-pin DIN-based technology. At that time MIDI 2.0 or “HD MIDI” was still a bit of a pipe dream, but it hinted at a better future where all MIDI devices were intelligent, or at least could do basic 21st-century things like communicating in both directions at once and announcing what they were.

Two years down the road, MIDI 2.0 is hitting the prototype stage and looking back, I’m prompted to ask the question: who is it for?

What I mean is that so many of the responses I got to the original article were about how happy people were with MIDI. It makes me wonder how many people actually have a MIDI 2.0-shaped hole in their studios or their workflow.

First, let’s look at what the MIDI 2.0 NAMM conference brought up.

MIDI 2.0

There was plenty of helpful clarification of MIDI 2.0, much of it delivered as bullet points.

- Will it need a new cable? No, it doesn’t care what cable (or “Transport”) you use as long as it’s compatible at each end. We already have 5-pin DIN, TRS minijack, USB and so on.

- Can it work now with existing Transports? No, new specifications need to be written for each.

- Will it provide better timing? Yes.

- Can it provide better resolution? Yes. There’s a lot about MPE and individual note control, but existing messages also get an upgrade: Velocity will be 16-bit, while Poly Pressure, Channel Pressure and Pitch Bend will be 32-bit.

- Can it cope with microtonal and non-Western scales? Oh yes.
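To make the velocity upgrade concrete, here is a sketch of stretching a 7-bit MIDI 1.0 velocity (0-127) to a 16-bit one (0-65535). A plain bit shift would map 127 to 65024 rather than full scale, so this follows the "min-center-max" idea from the MIDI 2.0 translation guidance as I understand it: 0 stays at 0, the centre stays at the centre, and the top value reaches the top. The helper name `scale_up` is hypothetical, and the published spec should be checked before relying on the exact bit pattern.

```python
def scale_up(value: int, src_bits: int = 7, dst_bits: int = 16) -> int:
    """Hypothetical helper: upscale src_bits -> dst_bits, min-center-max style."""
    scale_bits = dst_bits - src_bits
    shifted = value << scale_bits
    src_center = 1 << (src_bits - 1)
    if value <= src_center:
        # Lower half (including the centre): a plain shift keeps it linear.
        return shifted
    # Upper half: repeat the bits below the MSB into the empty low bits
    # so that the maximum input lands exactly on the maximum output.
    repeat_bits = src_bits - 1
    repeat_value = value & ((1 << repeat_bits) - 1)
    if scale_bits > repeat_bits:
        repeat_value <<= scale_bits - repeat_bits
    else:
        repeat_value >>= repeat_bits - scale_bits
    while repeat_value:
        shifted |= repeat_value
        repeat_value >>= repeat_bits
    return shifted

for v in (0, 1, 64, 100, 127):
    print(v, "->", scale_up(v))
```

With these defaults, 0, 64 and 127 map to 0, 32768 and 65535, so the extremes and the midpoint line up in both protocols.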

Then we have the three Bs:

- Bidirectional – MIDI changes from being a monologue to being a dialogue (nice).

- Backwards compatible – communication can drop back to MIDI 1.0 if necessary.

- Both – any improvements should also aim to enhance MIDI 1.0.
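Dropping back to MIDI 1.0 is lossy. A minimal sketch of squeezing a 16-bit velocity into 7 bits, assuming a simple right shift that keeps the top 7 bits. One wrinkle worth guarding against: in MIDI 1.0 a Note On with velocity 0 is treated as a Note Off, so this sketch clamps quiet-but-real notes to 1. The function name and the clamping choice are my own assumptions, not quoted from the spec.

```python
def velocity_to_midi1(velocity16: int) -> int:
    """Hypothetical fallback: reduce a 16-bit Note On velocity to 7 bits."""
    if not 0 <= velocity16 <= 0xFFFF:
        raise ValueError("velocity must be in 0..65535")
    v7 = velocity16 >> 9  # keep the 7 most significant bits
    # A MIDI 1.0 Note On with velocity 0 means Note Off, so keep notes audible.
    return max(v7, 1)

# Each 7-bit value now stands in for 512 different 16-bit velocities.
print(velocity_to_midi1(0xFFFF), velocity_to_midi1(0x8000), velocity_to_midi1(100))
```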

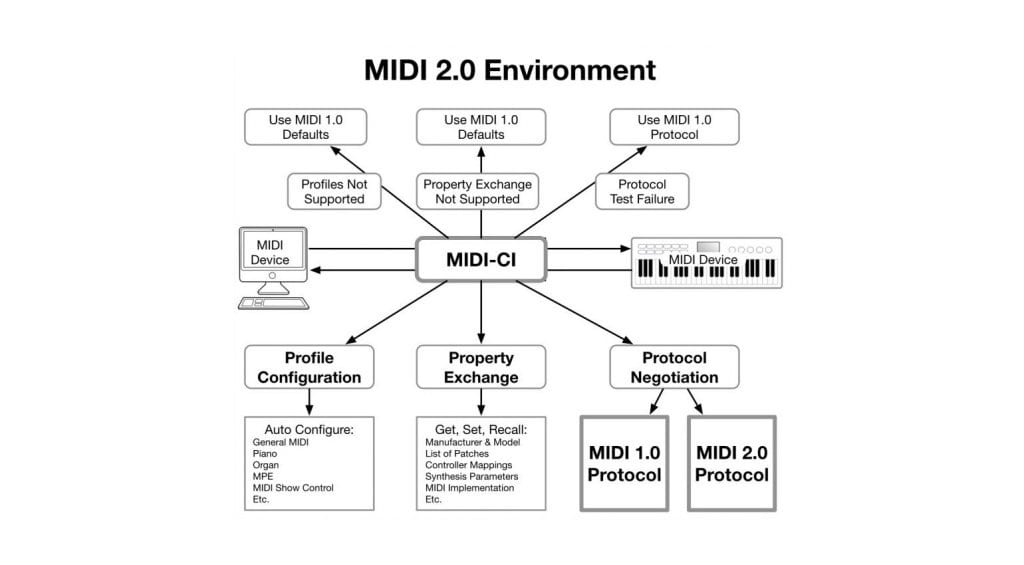

Followed by the three Ps:

- Profile Configuration – Profiles defining how a MIDI device responds to messages.

- Property Exchange – Messages that relay what a device does and its settings.

- Protocol Negotiation – How to negotiate MIDI 2.0 or drop to MIDI 1.0.
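The negotiation idea can be modelled in a few lines: each side advertises what it supports, both settle on the best protocol they share, and silence or no overlap means MIDI 1.0. This is a toy model with made-up names (`Protocol`, `negotiate`); the real MIDI-CI exchange is carried in specific SysEx messages that are not reproduced here.

```python
from __future__ import annotations

from enum import IntEnum

class Protocol(IntEnum):
    # Higher value = preferred. Names are illustrative only.
    MIDI_1_0 = 1
    MIDI_2_0 = 2

def negotiate(ours: set[Protocol], theirs: set[Protocol] | None) -> Protocol:
    """Pick the best shared protocol; no reply or no overlap means MIDI 1.0."""
    if not theirs:  # the other device never answered, e.g. a MIDI 1.0-only synth
        return Protocol.MIDI_1_0
    shared = ours & theirs
    return max(shared) if shared else Protocol.MIDI_1_0

modern = {Protocol.MIDI_1_0, Protocol.MIDI_2_0}
print(negotiate(modern, modern).name)               # MIDI_2_0
print(negotiate(modern, {Protocol.MIDI_1_0}).name)  # MIDI_1_0
print(negotiate(modern, None).name)                 # MIDI_1_0
```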

So, in essence, MIDI 2.0 aims to provide two-way communications between MIDI devices that reveal who they are and what they do. Profiles will allow for automatic and global mapping of parameters and at all times it will be able to drop to MIDI 1.0 if MIDI 2.0 doesn’t work. Support for higher resolution data and individual note MPE will be baked in.
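The “reveal who they are and what they do” part is what makes automatic mapping possible. A toy sketch, assuming a device can hand over a list of parameter descriptions: the `ParamInfo` structure, its fields and the `auto_map` function are invented for illustration and are not the real Property Exchange format. The controller simply assigns its knobs, in order, to the parameters the device flags as most important.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass
class ParamInfo:
    # Hypothetical description a device might report about one parameter.
    name: str
    controller: int  # MIDI CC number the device listens on
    priority: int    # lower = more important to put under a knob

def auto_map(params: list[ParamInfo], knob_count: int) -> dict[int, ParamInfo]:
    """Assign physical knobs 1..knob_count to the most important parameters."""
    ranked = sorted(params, key=lambda p: p.priority)
    return {knob: p for knob, p in enumerate(ranked[:knob_count], start=1)}

reported = [
    ParamInfo("Resonance", 71, 2),
    ParamInfo("Cutoff", 74, 1),
    ParamInfo("Release", 72, 3),
]
for knob, p in auto_map(reported, knob_count=2).items():
    print(f"knob {knob} -> {p.name} (CC {p.controller})")
```

The point of the sketch is that no “MIDI Learn” step is needed: the mapping falls out of what the device reports about itself.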

Who is it for?

These are all great and useful things, but I wonder whether existing technology has already overtaken this future. MPE already works within the current MIDI 1.0 specification by spreading notes across several MIDI channels, so that each note can carry its own pitch bend and pressure data, and increasingly DAWs offer ways to record and edit it. Bidirectional control that reveals parameters is available in devices that support OSC and is already used in many controllers, particularly those built for Ableton Live.
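The MIDI 1.0 workaround behind MPE can be sketched like this: each sounding note is parked on its own “member” channel, so pitch bend sent on that channel affects only that note. The sketch assumes an MPE lower zone (master on channel 1, members on channels 2-16) and a member-channel bend range of ±48 semitones, which I understand to be the MPE default. The `MPEAllocator` class is hypothetical and skips details such as voice stealing.

```python
from __future__ import annotations

class MPEAllocator:
    """Hypothetical per-note channel allocator for an MPE lower zone."""

    def __init__(self, bend_range: float = 48.0):
        self.bend_range = bend_range
        # Status bytes use 0-based channels: 1..15 = MIDI channels 2..16.
        self.free = list(range(1, 16))
        self.active: dict[int, int] = {}  # note number -> channel

    def note_on(self, note: int, velocity: int) -> bytes:
        channel = self.free.pop(0)  # raises IndexError if all 15 are busy
        self.active[note] = channel
        return bytes([0x90 | channel, note, velocity])

    def bend(self, note: int, semitones: float) -> bytes:
        channel = self.active[note]
        # 14-bit pitch bend: 8192 is centre, 0..16383 covers the full range.
        value = round(8192 + semitones / self.bend_range * 8192)
        value = max(0, min(16383, value))
        return bytes([0xE0 | channel, value & 0x7F, value >> 7])

    def note_off(self, note: int) -> bytes:
        channel = self.active.pop(note)
        self.free.append(channel)
        return bytes([0x80 | channel, note, 0])

mpe = MPEAllocator()
chord = [mpe.note_on(n, 100) for n in (60, 64, 67)]  # C major on three channels
print(mpe.bend(64, 1.0).hex(" "))  # bend only the E up by one semitone
```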

Hardware controllers are increasingly integrated with DAWs and other pieces of software. I recently picked up a PreSonus ATOM and it instantly works with the Studio One Impact XT drum machine without any setup or configuration. Consider the NKS system from Native Instruments where their controller keyboards map themselves automatically to any compatible instruments or effects. Or the similar VIP software from Akai or the DAW integration of Nektar controllers.

So many of the things that MIDI 2.0 offers are already available with the right choice of hardware. And the market has become quite innovative, offering tightly integrated DAW controllers that are specific to their task, rather than every manufacturer producing a generic MIDI 2.0 controller that works with everything but isn’t specific to anything. Part of MIDI control within a studio or performance environment is how you put the hardware and software together. Those are worthwhile tasks that teach you a lot about your gear and how it works. Wouldn’t a controller that “just works” undermine that a little? Sometimes I like taking the long way around. I sit at my MIDI keyboard and it plays what I want to play, and the knobs control what I want them to control. What is it I’m missing from that scenario?

So again I wonder: who is this for? Much of the driving force comes from the people behind ROLI. They want MPE technology to be integrated into all MIDI devices, which would make their own range of MPE controllers compatible with a larger range of gear. From a general manufacturer’s point of view, it gives them all a chance to sell us yet another controller, but this time with MIDI 2.0 stamped on the box. How much of this higher resolution and these enhanced protocols am I actually going to use? It’s a bit like the way many of us are finding that, despite the almost limitless possibilities of composition in a DAW, we actually quite enjoy a MIDI-free 8-step analogue sequencer.

MIDI 2.0: How much do we really need it?

So, in my last article, I argued that MIDI was a bit rubbish and needed an upgrade, whereas now I’m asking whether we really need MIDI 2.0 at all. How contrary am I? MIDI 2.0, if it ever makes it into everyday MIDI devices, could be awesome. And it will be at its best if we don’t even notice it. Things will just be simpler and more automatic; we’ll start forgetting that “MIDI Learn” ever existed and just get on with turning knobs and enjoying the connection. I have my doubts that MIDI 2.0 will change much for most people, and with a bit of luck we’ll forget what all the fuss was about.

More information

- MIDI.org website

8 responses to “MIDI 2.0: Great leap forward or too little, too late?”


I submit that the premise of your whole argument is flawed because of the contextual box you are put into by the physics of a keyboard.

As usual, MIDI is being discussed by a musician who uses a traditional keyboard controller.

Keyboards are the most restrictive, non-expressive musical instruments. You want evidence? Look at a piano, the keyboard which started the controller type.

The piano is a serial pitch device. Pitch goes up and down; it is bi-directional. A piano cannot play in between the notes of the 12-note scale.

Yes, MIDI 1.0 gave keyboards pitch, aftertouch, and velocity control. But these performance enhancements are not inherent in the physical design of keyboards.

Look at a wind instrument. These have the same serial pitch architecture, just like keyboards. But a wind instrument can vary pitch.

Finally, look at a multi-stringed instrument. It is a parallel pitch device. Depending upon the number of strings (n), it is like a set of (n) serial pitch devices laid out in parallel. And each of these is like a wind instrument; it can vary pitch individually. And that variable pitch is built into the physics of the instrument.

So, for example, a guitar can play a C major chord and add a pitch bend on 1, 2, 3, … n strings. Moreover, the amount of pitch bend can differ from string to string.

A keyboard player just cannot emulate this behavior. In fact, the addition of all the controller variations (beyond note on/off) in both MIDI specifications is an attempt to model these performance enhancements built into other types of instruments.

I put a lot of the blame for this situation on guitarists’ ignorance of MIDI. Most guitar players put themselves into a similar perceptual box. They simply do not consider playing a hardware or software synth or sampler to be within their purview.

Totally accurate guitar pitch to MIDI in real time is non-existent. That is an argument that keyboard players can correctly make and win.

However, the technology to make real time accurate stringed instrument pitch to MIDI already exists.

Look at the Source Audio C4 pedal. It tracks a monophonic analog input from a guitar string’s audio stream with extremely fast and accurate output to its synth electronics input. Of course, the details of how it accomplishes this feat are proprietary. Source Audio isn’t saying exactly what signals feed the pedal’s oscillator. Based on the editor screenshots Source Audio has provided, they are likely ADSR, gate, and pitch CV.

As we all know these analog signals are easily converted to MIDI.
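As a rough illustration of that conversion, a pitch CV can be turned into a MIDI 1.0 note number plus a pitch bend for the leftover fraction. The sketch assumes a 1 V/octave scale, a hypothetical reference where 0 V equals MIDI note 36, and the common ±2 semitone bend range; a real converter would also need to handle gate and envelope signals, and timing.

```python
from __future__ import annotations

import math

def cv_to_midi(volts: float, ref_note: int = 36, bend_range: float = 2.0) -> tuple[int, int]:
    """Convert a 1 V/oct pitch CV to (MIDI note, 14-bit pitch bend value)."""
    exact = ref_note + 12.0 * volts   # one volt per octave = 12 semitones
    note = math.floor(exact + 0.5)    # nearest note, rounding halves up
    offset = exact - note             # leftover in semitones, -0.5..+0.5
    bend = round(8192 + offset / bend_range * 8192)
    return note, max(0, min(16383, bend))

print(cv_to_midi(1.0))    # one octave above the reference, no bend
print(cv_to_midi(0.125))  # halfway between two keys: nearest note plus a bend
```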

Note: this pedal has not been released yet. However, the demos show that the tech does what it needs to do; providing fast and accurate tracking output from a guitar to a synth oscillator.

Note 2: the Korg MS-20 had a mono input which converted a guitar’s output into an ability to drive the oscillators. It wasn’t fast enough for any serious real time applications, but with the Source Audio pedal it is obvious that the technology has been radically improved.

So if we assume that it is analog circuitry controlling these parameters, it is reasonable to conclude that this technology could become the basis for fast and accurate polyphonic pitch to MIDI.

How do we go from mono to poly? It’s simple. Remember the physics of a stringed instrument. It is a parallel set of serial pitch devices. So for n strings, set up n channels of this technology in parallel.

The only other requirement to go polyphonic has been met. You need an n-channel guitar pickup so that each string provides its own audio stream. That has been done by multiple manufacturers. Take a look at the Cycfi Nu2 Multi for a great example. It is already for sale.

I find it ironic that analog control technology (CV) which was originally developed to provide control for keyboards might provide the basis for poly guitar controller technology.

So to answer your question, “who needs MIDI 2.0?”, I say…

Guitarists absolutely need MIDI 2.0 to provide enough resolution to cover the nuanced performances built into the physics of their instruments. They just don’t know it yet.

And yet…. Rachmaninoff. Art Tatum. Herbie Hancock. Beethoven. Non-expressive? If you find that you need more axes of control to achieve expressivity, I submit the fault lies with your imagination and technique, not with the so-called ‘restrictiveness’ of an instrument that has led the world as the main solo instrument choice of great composers and improvisers for hundreds of years.

The idea that more axes of control equals expressiveness is fundamentally a cop-out. Once MIDI 2.0 comes along, someone, somewhere will claim how limiting it is, and what we need is MIDI 3.0. Rinse and repeat.

Don’t get me wrong. I have no problem with the features that MIDI 2.0 will add. But without widespread hardware adoption, whether by keyboards or electronic guitar controllers (how niche a market is that, LOL?), how widely will 2.0 take off?

A standard is only as strong as its adoption. Proprietary standards that pre-dated MIDI sometimes had superior capabilities, but failed commercially for various reasons. Not until MIDI, first proposed in 1981, did a common standard emerge. And from Day One it was railed against by people who saw the control limitations. But despite that, it was accepted as the best of a bad deal and widely adopted by just about everybody (including early MIDI guitars).

Today’s scene is far more fractured, with so many choosing to go their own way. Will MIDI 2.0 take off the way 1.0 did? It is going to take hardware AND software to succeed. With the industry in chaos and R&D reduced to begging on Kickstarter for people to fund speculative products with no guarantee of delivery (a total change from the ’80s juggernauts that funded MIDI 1.0’s explosion of gear, gear that even today is widely emulated and commands premium used prices, so much for ‘restrictive expressiveness’!), I think extrapolating from MIDI 1.0’s early success is optimistic.

I’d love to see it adopted, but it is going to be expensive to develop controllers (of any sort!) that can reliably track input at that resolution and output it reliably, along with hardware capable of receiving it without choking on the data density (a lot of affordable keyboards and modules cannot keep up with a fairly dense MIDI 1.0 stream without latency and other issues).
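Part of that bottleneck is the wire itself. Classic 5-pin DIN MIDI runs at 31,250 bits per second, and each byte is framed by a start bit and a stop bit, so one byte takes 320 µs. The back-of-envelope sketch below ignores any processing inside the receiving device and shows how long a chord takes to arrive, with and without running status (which lets consecutive messages of the same type on the same channel omit the status byte).

```python
BAUD = 31_250       # MIDI 1.0 DIN bit rate
BITS_PER_BYTE = 10  # 1 start bit + 8 data bits + 1 stop bit

def transmit_ms(num_bytes: int) -> float:
    """Wire time in milliseconds for a number of MIDI bytes."""
    return num_bytes * BITS_PER_BYTE / BAUD * 1000

def chord_ms(notes: int, running_status: bool = True) -> float:
    """Time to send `notes` Note On messages on one channel."""
    if notes <= 0:
        return 0.0
    if running_status:
        # First message: status + note + velocity; the rest omit the status byte.
        return transmit_ms(3 + 2 * (notes - 1))
    return transmit_ms(3 * notes)

print(f"one note:          {chord_ms(1):.2f} ms")
print(f"10-note chord:     {chord_ms(10):.2f} ms")
print(f"10 notes, no RS:   {chord_ms(10, running_status=False):.2f} ms")
```

Even in the best case the last note of a ten-note chord lands several milliseconds after the first, before the receiving device has done any work at all.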

In the meantime, every piece of ‘expressive’ music performed on piano and keyboards in the last 400 years has managed it despite the limitations. Limitations are good. Limitations foster focus. Limitations make for structure. Limitations somehow didn’t infuriate Chopin, Brahms, Chick Corea. If it was good enough for them, it’s fine with me!

You’re missing the point. You can play piano expressively, as the great artists you pointed out have demonstrated, but a piano is fundamentally limited by design. Try playing microtonal compositions on piano. You’ll struggle. Now, there are MANY things that a physical piano can do that MIDI can’t – for example, you can hit a piano leg with a sledgehammer and record the result. MIDI has no analogue for that (although you could perhaps control a physical modelling synthesizer via MIDI to approximate the interaction).

Either way, I think the original point was that to understand the advantages of MIDI 2.0 we need to throw off the constraints of conventional instrumentation.

Side Note: I’m in the “far too little and far too late” camp. MIDI 2.0 will exist but people will just keep using only the MIDI 1.0 functionality.

The larger problem is, will hardware manufacturers implement the additional resolution and capabilities of the new specification when so few will actually use it? In the software world it will vie with already established protocols, but in the real world there are these mythical beasts called musicians who actually play music rather than sitting at home in their bedroom studios. To a large degree they have not yet abandoned hardware keyboards because of reliability and latency issues. Will MIDI 2.0 appear in the keyboards they will be playing?

Imagine if MIDI 1.0 had been ratified but nobody made keyboards that used it… Would it have turned into the standard it is now?

Increased resolution comes at great cost to hardware manufacturers. Not to mention the question of how much R&D money they want to put into a capability such as bidirectional query that will rarely be used on stage or by the majority of customers of hardware.

Can MIDI 2.0 take off the way 1.0 did if it has little impact on hardware?

For me it’s all about the velocity resolution. First, you just can’t scale or shape the velocity curve without losing a terrible amount of resolution in certain areas, making it unplayable. Second, if you’ve ever played a high-resolution MIDI keyboard with a capable sound engine, you’ll instantly miss the better response on every other keyboard. Third, MIDI 1.0 timing is a mess.
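The first point, about curves eating resolution, is easy to check. This sketch applies the same hypothetical power-law curve (exponent 2, an arbitrary choice) to 7-bit and 16-bit velocities and counts how many distinct output values survive. With 7 bits, several quiet inputs collapse onto the same output while some loud output values are never reached at all.

```python
def shape(value: int, max_value: int, exponent: float) -> int:
    """Apply a power-law velocity curve, keeping the same bit depth."""
    return round(max_value * (value / max_value) ** exponent)

def distinct_levels(bits: int, exponent: float) -> int:
    """Count how many different outputs the curve can actually produce."""
    max_value = (1 << bits) - 1
    return len({shape(v, max_value, exponent) for v in range(max_value + 1)})

print("7-bit: ", distinct_levels(7, 2.0), "of 128")
print("16-bit:", distinct_levels(16, 2.0), "of 65536")
```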

Let’s say you’re sequencing drums for a rock demo. Most of the drums will be pretty loud strikes; let’s say with a velocity of 100 or more. Thus, with a typical working range of 100-127, you have only 28 velocity levels to use for most of your composition.
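The same arithmetic scales to higher resolutions. Each 7-bit step corresponds to 2^9 = 512 steps at 16 bits, so the same loud slice of the range holds far more distinct values. A sketch, assuming the 16-bit range is a straight linear subdivision of the 7-bit one (the MIDI 2.0 up-scaling rules may differ slightly near the top of the range):

```python
def levels_in_slice(low: int, high: int, bits: int = 7) -> int:
    """Distinct velocity codes covering the 7-bit velocities low..high."""
    per_step = 1 << (bits - 7)  # at 16 bits: 512 codes per 7-bit step
    return (high - low + 1) * per_step

print(levels_in_slice(100, 127))           # 28 levels in MIDI 1.0
print(levels_in_slice(100, 127, bits=16))  # 14336 levels at 16-bit
```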

Next, consider that these loudness increments, as they approach 0 dB on your DAW, still produce differences of nearly 1 dB at best, and many dB at the low end of the scale. Drum crescendos, for example, especially gradual ones, will lack volume differences for many hits as they get louder; and the difference WILL be audible.

Who needs it? Musicians who don’t want their dynamics bastardized!